One of the most important milestones for babies is the ability to engage in joint attention – the shared focus of two individuals on an object. However, it is unclear how babies develop this skill. An essential prerequisite for joint attention is gaze-following: being able to look where someone else is looking.

There are two main theories of how gaze-following develops. The first assumes that babies are born understanding that people want to communicate with them, and that they therefore instinctively follow adults’ gaze to look at what is being communicated about (1). The second assumes instead that babies can learn to follow gaze without understanding anything about communication, just through interacting with the people around them (2). This would work as follows: because adults tend to look at interesting things, babies who look in the ‘right’ place (even if just by chance) are rewarded by getting to see the interesting thing. This positive reinforcement makes babies more likely to look in the right place next time.
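The reinforcement idea behind the second theory can be illustrated with a toy simulation. This is only a minimal sketch, not the model from (2) or the robot in (3): the four gaze directions, the starting tendencies, the reward size and all function names are assumptions made up for illustration. The simulated learner starts by looking in a random direction, and whenever it happens to look where the adult is looking, that response to that gaze cue is strengthened.

```python
import random

# Hypothetical set of directions in which the adult can look.
DIRECTIONS = ["left", "right", "up", "down"]


def initial_preferences():
    """Equal tendency to look anywhere, whatever the adult's gaze (assumed start)."""
    return {gaze: {look: 1.0 for look in DIRECTIONS} for gaze in DIRECTIONS}


def simulate_learner(trials=2000, reward=1.0, seed=0):
    """Strengthen a look direction each time it happens to match the adult's gaze."""
    rng = random.Random(seed)
    prefs = initial_preferences()
    for _ in range(trials):
        adult_gaze = rng.choice(DIRECTIONS)
        # The learner picks a direction in proportion to its current tendencies.
        weights = [prefs[adult_gaze][look] for look in DIRECTIONS]
        look = rng.choices(DIRECTIONS, weights=weights)[0]
        # Looking in the 'right' place reveals the interesting thing: reinforce it.
        if look == adult_gaze:
            prefs[adult_gaze][look] += reward
    return prefs


def follow_probability(prefs, gaze):
    """Probability that the learner looks where the adult is looking."""
    return prefs[gaze][gaze] / sum(prefs[gaze].values())


if __name__ == "__main__":
    before = initial_preferences()
    after = simulate_learner()
    for gaze in DIRECTIONS:
        print(gaze, follow_probability(before, gaze), follow_probability(after, gaze))
```

With these assumed settings the learner starts at chance (one in four) and ends up following gaze on most trials, without ever representing what the adult intends.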

To test whether gaze-following could be learned in this way, roboticists from the National Institute of Information and Communications Technology, Japan, created a robot that had the task of learning to look at an object that a human experimenter was also looking at (3). The robot had no understanding of communicative intention: it just moved its head randomly at first, and was told when it got it wrong, making it less likely to move its head in that way next time. Over time, the robot learned how to follow the experimenter’s gaze! This was a very important finding, because it suggested that even without understanding that adults want to communicate with them, babies might learn to follow their parents’ gaze just by learning that adults often look at interesting things.

Interestingly, the robot learned to follow gaze in the horizontal plane (left and right) before the vertical plane (up and down). This was because the images it received of the adult looking in different directions were more perceptually variable (that is, looked more different from each other) in the horizontal than in the vertical plane. This raised an intriguing question: do babies also learn to follow gaze first in the horizontal plane?

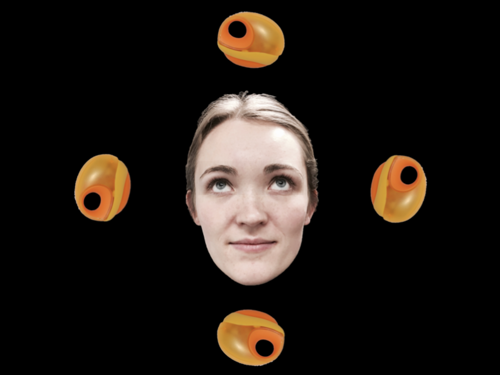

We decided to test this prediction by measuring how accurately babies follow gaze horizontally and vertically. To do this, we made a series of videos of an experimenter looking up, down, left and right at an interesting toy. We showed these videos to 12-month-old babies, an age at which babies are typically just beginning to learn to follow gaze. Just as the robot study predicted, we found that 12-month-old babies were better at horizontal than vertical gaze-following – in fact, they could only follow gaze at all in the horizontal direction. We then ran the same study with 6-month-old babies, and found that they could not follow gaze in either the horizontal or the vertical direction.

So, we were able to show that, like the robot, babies go from being unable to follow gaze in either direction to being able to follow horizontal gaze only. But what happens next? We’re now testing 18-month-old babies, hoping to show that at this age babies can follow both horizontal and vertical gaze (just as the robot eventually learned both).

This study is an exciting example of how developmental robotics (the study of robots that can learn) can help infant psychologists develop new research questions, in order to test and refine theories about how babies learn.

This work forms part of Priya Silverstein’s doctoral research and will be presented at the 9th Joint IEEE International Conference on Development and Learning and on Epigenetic Robotics (ICDL-EPIROB), Oslo, Norway, and at the 2019 Lancaster International Conference on Infancy and Early Development (LCICD), Lancaster, UK.

Acknowledgements:

Huge thanks to all the parents and babies who took part – we couldn’t do it without you! P. Silverstein is funded by a Leverhulme Trust Doctoral Scholarship [DS-2014-014]. This work was supported by the International Centre for Language and Communication Development (LuCiD) at Lancaster University and University of Manchester, funded by the Economic and Social Research Council (UK) [ES/L008955/2].

Further information:

For the version of the manuscript accepted at ICDL-EPIROB, and all materials, data, code, and pre-registration, please see here: https://osf.io/fqp8z/.

References:

(1) Csibra, G., & Gergely, G. (2009). Natural pedagogy. Trends in Cognitive Sciences, 13(4), 148-153. doi: 10.1016/j.tics.2009.01.005

(2) Triesch, J., Teuscher, C., Deak, G., & Carlson, E. (2006). Gaze following: why (not) learn it? Developmental Science, 9(2), 125-147. doi: 10.1111/j.1467-7687.2006.00470.x

(3) Nagai, Y., Asada, M., & Hosoda, K. (2006). Learning for joint attention helped by functional development. Advanced Robotics, 20(10), 1165-1181. doi: 10.1163/156855306778522497